Little boat

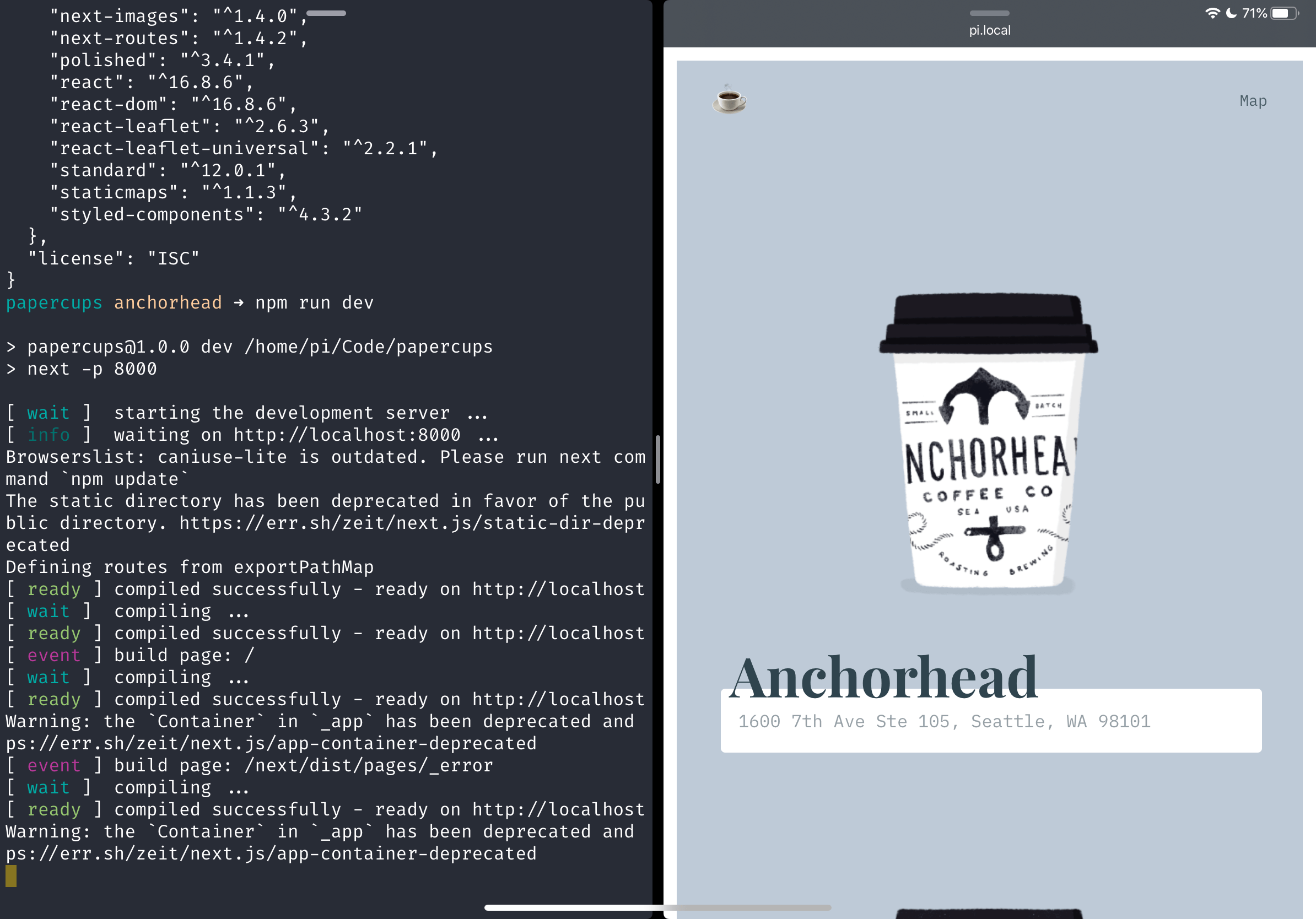

I have been updating dependencies and getting ready to add a few more cups. I always find it interesting how quickly side projects can become stale if you don't spend time on them regularly; this may be worth a longer post.

Building this little lamp has been awesome! We did a TERRIBLE job casting the resin, and the finish of the piece could be a lot better. But now Marco and Alex have the coolest resin lamp 💖

We also had some time to work on other characters from the game.

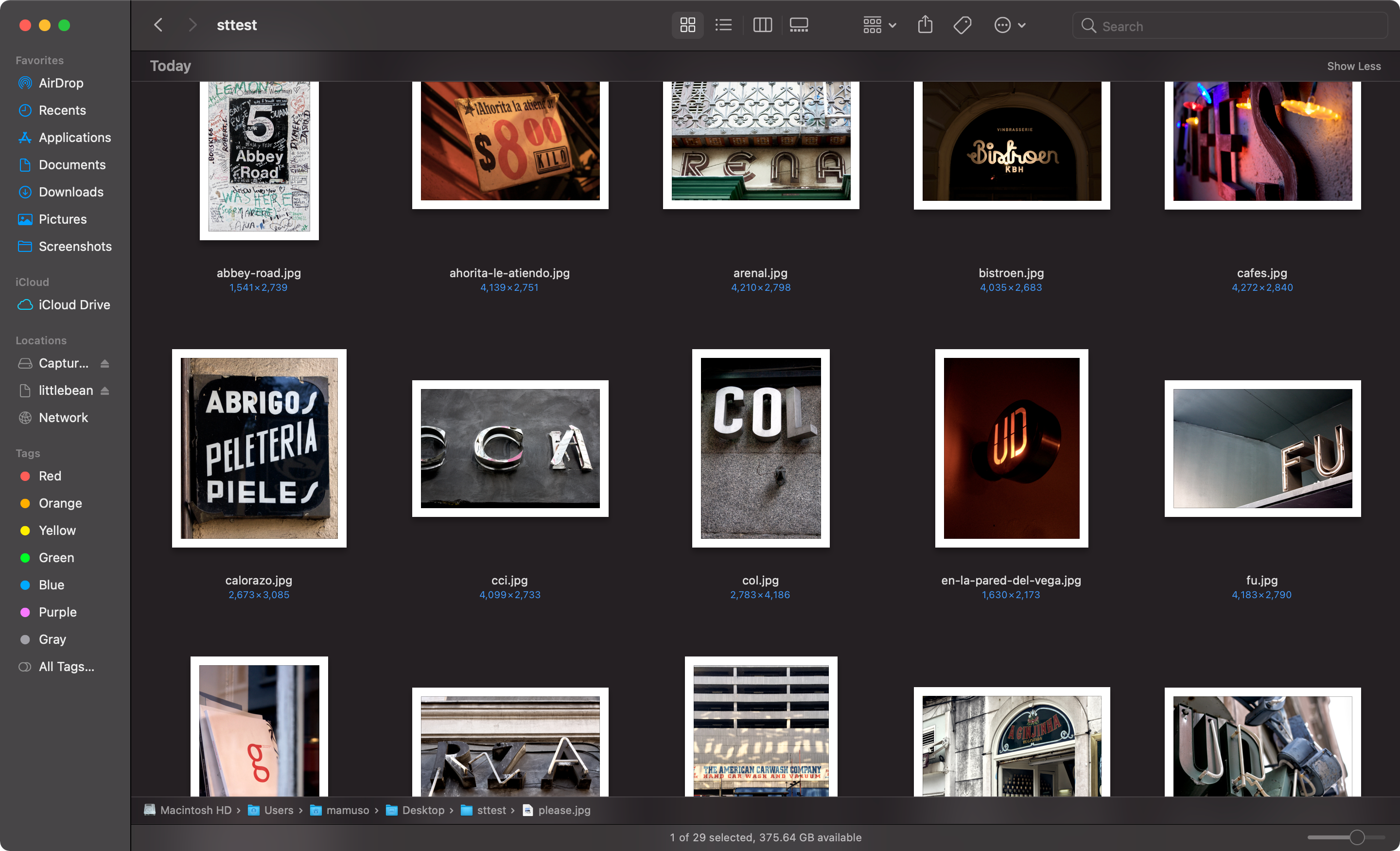

I have been trying for months to merge all my photo collections into a single place. Photo storage services make data export incredibly frustrating. Some services send you images and metadata in separate files, and it is up to you to merge that information.

Flickr is one of those services. Their data export had been waiting in my Downloads folder for weeks, and today I decided it was time to cross this task off the list. After a bit of JavaScript and some ExifTool magic, I got over 1,200 pictures ready for review.
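For the curious, here is a minimal sketch of what the merging step could look like. It is not the exact script I ran. It assumes each photo comes with a JSON sidecar whose fields are called `name`, `description`, `date_taken`, and `tags` (check your own export, the names may differ), and `buildExifToolArgs` is a hypothetical helper that only builds the argument list for ExifTool.

```javascript
// Hypothetical helper: turn one metadata object into ExifTool arguments.
// The field names (name, description, date_taken, tags) are assumptions
// about the export format; adjust them to match your files.
function buildExifToolArgs(meta, imagePath) {
  const args = ["-overwrite_original"];

  if (meta.name) args.push(`-Title=${meta.name}`);
  if (meta.description) args.push(`-ImageDescription=${meta.description}`);

  // Assumed input format: "2010-05-01 12:34:56".
  // EXIF dates use colons in the date part: "2010:05:01 12:34:56".
  const match = /^(\d{4})-(\d{2})-(\d{2}) (\d{2}:\d{2}:\d{2})$/.exec(
    meta.date_taken ?? ""
  );
  if (match) {
    const [, year, month, day, time] = match;
    args.push(`-DateTimeOriginal=${year}:${month}:${day} ${time}`);
  }

  // One -Keywords= argument per tag adds each tag as a separate keyword.
  for (const { tag } of meta.tags ?? []) {
    args.push(`-Keywords=${tag}`);
  }

  args.push(imagePath);
  return args;
}

const example = buildExifToolArgs(
  {
    name: "Sign",
    date_taken: "2010-05-01 12:34:56",
    tags: [{ tag: "typography" }, { tag: "barcelona" }],
  },
  "photo.jpg"
);
console.log(example.join("\n"));
```

Passing the resulting array to Node's `child_process.execFile("exiftool", args, callback)` instead of building a shell string avoids quoting problems with titles and descriptions that contain spaces or quotes.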

I rediscovered that, between 2005 and 2014, I took a good number of pictures of urban typography.

I know how it started. Back in 2003, I got a copy of America Sánchez’s Barcelona Gráfica. The book clusters lettering, typography, and signals that represent the character of the city. I liked the idea of treating the urban environment as a graphic canvas.

I don’t know why I stopped, though. I enjoyed the letter hunting and still enjoy those pictures, so I created a silly site.

Choosing colors is hard. Adapting the color mode of your website to your users' preferences is not (or at least it should not be).

For this deceptively short recipe, you will need a couple of ingredients: CSS variables and the media feature prefers-color-scheme.

    :root {
      --text: #333333;
    }

    @media (prefers-color-scheme: dark) {
      :root {
        --text: #ffffff;
      }
    }

    element {
      color: var(--text);
    }

You can play with a simple but functional example in this CodePen; fiddle with your OS appearance preferences to see the colors change. This foundation also works like a charm in more complex scenarios:

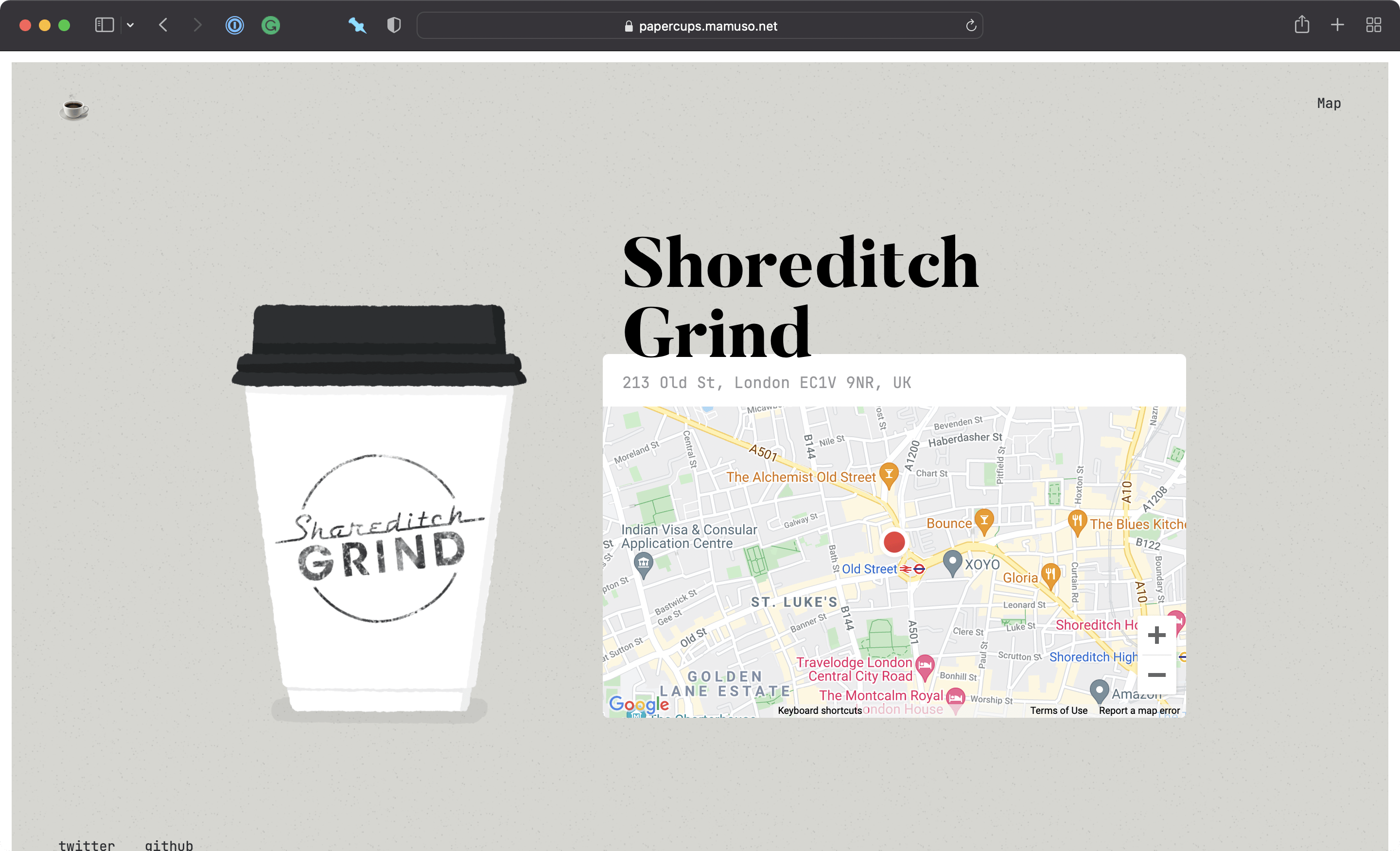
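Once the list of variables grows, writing both blocks by hand gets tedious. As a rough sketch, the same recipe can be generated from a single palette object. Everything here is illustrative: `buildThemeCss` is a made-up helper, and the `background` values are placeholders rather than colors from the example above.

```javascript
// Hypothetical palette. Only the --text values come from the recipe above;
// the background colors are placeholders for illustration.
const palette = {
  text: { light: "#333333", dark: "#ffffff" },
  background: { light: "#ffffff", dark: "#1a1a1a" },
};

// Build one "--name: value;" line per palette entry for the given mode.
function declarations(colors, mode, indent) {
  return Object.entries(colors)
    .map(([name, values]) => `${indent}--${name}: ${values[mode]};`)
    .join("\n");
}

// Emit the light values on :root and override them inside the
// prefers-color-scheme: dark media query.
function buildThemeCss(colors) {
  return [
    ":root {",
    declarations(colors, "light", "  "),
    "}",
    "",
    "@media (prefers-color-scheme: dark) {",
    "  :root {",
    declarations(colors, "dark", "    "),
    "  }",
    "}",
  ].join("\n");
}

console.log(buildThemeCss(palette));
```

Elements keep using `var(--text)` and friends as before; only the place where the values are defined changes.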

It's been a while without drawing papercups. I had so much fun with this one.

I'm programmatically screenshotting a bunch of very, very long pages, and I noticed that some captures were coming back full of white patches. For days I tried to find the source of the problem. Do images have enough time to load? Is there any CSS property in the page that is causing a misrender? What about animations?

It turns out Chromium has a hard time processing images over 16384px, so you need to capture, scroll, repeat, and then stitch the results together.
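The planning part of capture-scroll-repeat is simple arithmetic. Here is a minimal sketch, assuming the page height is already known in pixels; `planSlices` is a hypothetical helper, and the actual capturing and stitching depend on whatever screenshot tool you use.

```javascript
// Limit mentioned above; treat it as an assumption for your own setup.
const MAX_SLICE_HEIGHT = 16384;

// Hypothetical helper: split a page into vertical slices that each stay
// within the limit. Every slice says where to scroll (y) and how tall the
// capture should be (height).
function planSlices(pageHeight, maxSliceHeight = MAX_SLICE_HEIGHT) {
  if (!Number.isInteger(pageHeight) || pageHeight <= 0) {
    throw new RangeError("pageHeight must be a positive integer");
  }
  if (!Number.isInteger(maxSliceHeight) || maxSliceHeight <= 0) {
    throw new RangeError("maxSliceHeight must be a positive integer");
  }

  const slices = [];
  for (let y = 0; y < pageHeight; y += maxSliceHeight) {
    // The last slice is usually shorter than the rest.
    slices.push({ y, height: Math.min(maxSliceHeight, pageHeight - y) });
  }
  return slices;
}

console.log(planSlices(40000));
```

Each slice can then be captured on its own and pasted into the final image at its `y` offset.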

This approach brings other fun problems to the table:

All the screenshots of the current report, grouped by device.